Text Collections

What is a text collection?

A text collection will ingest content into a vector database using the settings provided by the user. In Montag, this means it brings together four key concepts:

- An API Client: to generate vectors

- Embedding Settings: To specify how to generate vectors

- Model Roles: The roles to use for any AI functions being used to enhance context chunks

- Vector DB: To store context vectors

How do text collections work?

A text collection is executed when content is uploaded to it’s API endpoint. The content will be read and “chunked” into smaller components of a fixed number of sentences. The chunks are then run through a “summary” procedure (if specified) to enhance the content with metadata (keywords, titles, etc.).

The metadata is then added to each chunk to improve indexing and result relevance, and the chunk is then stored int the vector databases namespace. What is a namespace?

A namespace is a way to segregate content stored in a vector DB, essentially enabling to use one database for multiple content types. For example, you may be storing technical documentation alongside customer information. You will not want those two content types to disrupt one another, so namespacing keeps them separate without needing a whole new database.

What are the Summary Function map and Summary Template?

When chunking data, the data loses coherence between chunks when it is retrieved and passed to a language model. To improve this, Montag will automatically ensure there is a three-sentence overlap between chunks (so the end of one chunk repeats as the beginning of the next chunk). However we can go further than that with summary functions.

Montag can make use of “AI Functions” (one-off requests to a pre-prompted model) to generate specific types of metadata based on the original content.

For example, you may want to have each chunk have some front-matter that looks like:

TITLE: A title for the content, derived from the content

KEYWORDS: The top 5 keywords for the entire content block

QUESTION: The content, phrased as a question

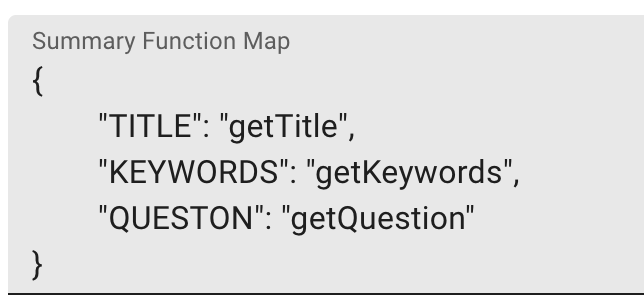

To make this front-matter, you would use the Prompt-tester in Montag to craft an AI function that provides each of these fields (let’s call them getTitle, getKeywords, and getQuestion), and then encode this as a JSON object in the Summary Function Map Field like so:

This will cause the text collection to call each of these named AI functions in order and then pass them to the summary template.

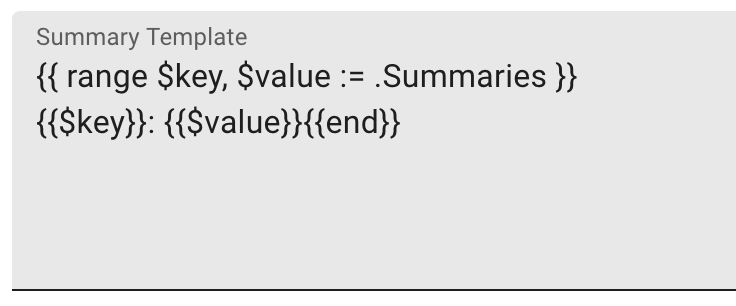

The summary template is a golang template that is used to structure the output from the summary function map. The outputs of each function replace the function name in the map, and so can be easily embedded like so:

How do I upload content to a Text Collection?

Learning plans take a zip file as a multipart upload to their API endpoint. The text collection can handle nested directories, so you can just zip up your content folder as-is. It’s recommended to trip content that is short or irrelevant to prevent it polluting your index.

Once you have your zip file, upload it using your auth-token as a header to the endpoint, like so:

curl -H @headers.txt \

-F file=@uploads/your-content.zip \

"https://Montag.your.server/api/corpus/4/upload"

If the command was successful, it will return an OK response with an ID:

{"ok":true,"run_id":"2030ed7c-e1f8-4d78-8edf-4c38b270c7f2"}

Make note of the run_id, as this can be used to monitor and resume the plan should something go wrong. What is the auth format?

The auth format uses a simple Bearer token with the JWT you get from logging into Montag: Authorization: Bearer Can I resume an upload or text collection if it crashes or stops for some reason?

Should the server crash, or something go wrong with the process, you can resume the upload by adding a ?resume<run_id> parameter to your request:

curl -H @headers.txt \

-F file=@uploads/tyk-operator.zip \

"https://Montag.your.server/api/corpus/4/upload?resume=2030ed7c-e1f8-4d78-8edf-4c38b270c7f2"

This will re-upload the file, but when it is processed, files in the zip already processed will be skipped by the ingesting function.

How do I know it worked?

All processed files are logged to the run_id, you can query this log using the API like so:

curl -H @headers.txt \

https://Montag.your.server/api/processlogs/2030ed7c-e1f8-4d78-8edf-4c38b270c7f2