AI Management for Platform Teams

Enable your teams to build AI-powered applications quickly, safely, and effectively.

Sign-up

We provide AI enablement in one place, with our AI Developer Portal and AI-as-a-Service platform capability.

Bring generative AI to your enterprise with confidence. Montag powers AI services within Tyk, and is used in production to drive productivity while providing safe and managed AI adoption.

Montag.AI provides a powerful self-service AI Developer Portal where your users can:

For administrators, it provides a secure way to let your users maximise productivity with AI while keeping your data secure.

Montag.AI supports multiple AI vendors, including open source, and allows you to switch between them at will, dynamically.

For example, you may wish to use GPT-4 for highly complex answers, but direct sensitive tasks at an internal open-source model.

Alternatively, you may want your chatbot or smart application to handle long inputs, and so use a model optimised for long inputs when these arise, such as Anthropic Claude-2, without having to build your entire stack on a single vendor.

Montag makes switching between models while using the same prompt easy, and allows you to manage your AI models in one place without exposing credentials to your team.
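As a rough illustration of the routing idea described above, the sketch below picks a backend per task while leaving the prompt untouched. It is not Montag's API: the `Task` type, the `choose_model` function, the model labels, and the length threshold are all assumptions made for the example.

```python
from dataclasses import dataclass

# Illustrative threshold only; not a Montag configuration value.
LONG_INPUT_CHARS = 20_000


@dataclass(frozen=True)
class Task:
    """A unit of work to send to a model (hypothetical type)."""
    prompt: str
    sensitive: bool = False


def choose_model(task: Task) -> str:
    """Pick a backend for a task; the prompt itself is never changed."""
    if task.sensitive:
        # Sensitive work stays on an internally hosted open-source model.
        return "internal-open-source"
    if len(task.prompt) > LONG_INPUT_CHARS:
        # Long inputs go to a model optimised for long context.
        return "claude-2"
    # Everything else goes to the default model for complex answers.
    return "gpt-4"


if __name__ == "__main__":
    print(choose_model(Task("Summarise this contract", sensitive=True)))
```

Keeping the decision in one function means the calling application never needs to know which vendor ends up answering.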

Montag features a built-in Slack integration and OpenAI chat API shim. We can have you set up with a GPT-powered chatbot infused with knowledge of your documentation, manuals and other data in minutes rather than weeks.

Montag.AI comes with its own web-scraping engine that can ingest any content on the web and give your bot access to content repositories such as ZenDesk, JIRA, and Confluence in a secure and self-managed way.

Upload a zip-file of your content, and Montag handles ingesting, chunking, vectorising, and enhancing your content for you, making it quick and easy to create AI-powered applications that are informed by your specialist knowledge domain.
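To give a feel for what the chunking step of such a pipeline involves, here is a minimal sketch of fixed-size chunking with overlap. Montag's actual chunking strategy is not documented here, so the `chunk_text` function and its `size` and `overlap` parameters are assumptions for illustration only.

```python
def chunk_text(text: str, size: int = 500, overlap: int = 50) -> list[str]:
    """Split text into chunks of at most `size` characters.

    Consecutive chunks share `overlap` characters so that a sentence cut
    at a boundary still appears whole in at least one chunk.
    """
    if size <= 0 or not 0 <= overlap < size:
        raise ValueError("size must be positive and overlap must be in [0, size)")
    if not text:
        return []
    step = size - overlap
    # Stop once the remaining tail is already covered by the previous chunk.
    last_start = max(len(text) - overlap, 1)
    return [text[i:i + size] for i in range(0, last_start, step)]


if __name__ == "__main__":
    for chunk in chunk_text("0123456789", size=4, overlap=1):
        print(chunk)
```

Each chunk would then be vectorised and stored; those later steps depend on the embedding model and vector database in use and are left out of the sketch.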

Use Montag's powerful ingestion pipeline and webhook support to send content into your vector database - e.g. as GitHub Actions, or CI/CD pipeline ops.

Montag.AI enables your team to store pre-programmed AI prompts, like stored queries in a database, and expose them as REST APIs. Make common AI use-cases as easy as a REST call.

Combine multiple LLMs, content repositories, and external content into more capable and specialised applications. Scripting enables your team to call on all the functionality within Montag from inside a robust and fast scripting language.
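The "stored query" analogy can be sketched as a named prompt template that a REST handler fills in from request parameters. The sketch below uses only Python's standard library; the `STORED_PROMPTS` registry, the `summarise-ticket` name, and the `render_prompt` function are hypothetical and do not reflect Montag's actual endpoint or storage format.

```python
from string import Template

# Hypothetical registry of stored prompts, keyed by the name an endpoint exposes.
STORED_PROMPTS = {
    "summarise-ticket": Template(
        "Summarise the following support ticket in $max_sentences sentences:\n\n$ticket"
    ),
}


def render_prompt(name: str, params: dict[str, str]) -> str:
    """Fill a stored prompt with request parameters.

    Raises KeyError if the prompt name is unknown or a parameter is missing,
    which a REST layer could translate into a 404 or 400 response.
    """
    template = STORED_PROMPTS[name]
    return template.substitute(params)


if __name__ == "__main__":
    print(render_prompt("summarise-ticket",
                        {"max_sentences": "2", "ticket": "Printer on fire"}))
```

Callers only supply parameters, so the prompt wording can be tuned centrally without touching client code.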

Scripts can be called from REST endpoints, intercept chatbot inputs, or intercept LLM outputs to enhance and enrich them. Scripts can also be used to create custom chatbot responses, or to create custom chatbot commands.
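The interception pattern described above can be pictured as hooks that run before and after the model call. This sketch is written in Python rather than Montag's own scripting language, and every name in it (`run_chat`, `redact_emails`, `add_footer`, the stand-in `llm` callable) is an assumption for illustration.

```python
import re
from typing import Callable, Iterable

Interceptor = Callable[[str], str]


def redact_emails(text: str) -> str:
    """Input hook: mask email addresses before the prompt reaches the model."""
    return re.sub(r"[\w.+-]+@[\w-]+\.[\w.-]+", "[redacted]", text)


def add_footer(text: str) -> str:
    """Output hook: enrich the model's reply with a fixed note."""
    return text + "\n\n(Answer generated from internal docs.)"


def run_chat(user_input: str,
             llm: Callable[[str], str],
             before: Iterable[Interceptor] = (),
             after: Iterable[Interceptor] = ()) -> str:
    """Apply input hooks, call the model, then apply output hooks."""
    for hook in before:
        user_input = hook(user_input)
    reply = llm(user_input)
    for hook in after:
        reply = hook(reply)
    return reply


if __name__ == "__main__":
    # A stand-in "model" that simply echoes its prompt.
    echo = lambda prompt: "You said: " + prompt
    print(run_chat("mail bob@example.com", echo, [redact_emails], [add_footer]))
```

Because hooks are plain functions from text to text, they can be chained in any order on either side of the model call.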

Build multi-modal, multi-vendor chatbots that can call on multiple content repositories to provide expert functionality to your users in a matter of minutes.

Montag.AI has a granular Access Control system with support for OpenID-compatible external IDPs (SSO), so you can integrate Montag into your organisation quickly and securely without new user repositories.

Montag's granular Access Control capability enables you to create Workspaces for teams, and secure upstream AI vendor credentials and API secrets away from your AI Developers using per-object-type read, write, delete, and listing permission scopes.
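One way to picture per-object-type permission scopes is a role that maps each object type to the set of actions it allows. The sketch below is a simplified model, not Montag's implementation: the `Role` class, the object-type names `prompt` and `vendor_credential`, and the `allows` method are all hypothetical.

```python
from dataclasses import dataclass, field

# The four scopes named in the text above.
ACTIONS = {"read", "write", "delete", "list"}


@dataclass
class Role:
    """A named role holding the allowed actions per object type (hypothetical)."""
    name: str
    scopes: dict[str, set[str]] = field(default_factory=dict)

    def allows(self, object_type: str, action: str) -> bool:
        """Return True only if this role was granted `action` on `object_type`."""
        if action not in ACTIONS:
            raise ValueError(f"unknown action: {action}")
        # Object types with no entry are denied by default.
        return action in self.scopes.get(object_type, set())


if __name__ == "__main__":
    developer = Role("ai-developer", {
        "prompt": {"read", "write", "list"},
        "vendor_credential": set(),  # credentials stay hidden from developers
    })
    print(developer.allows("prompt", "write"))
    print(developer.allows("vendor_credential", "read"))
```

Denying by default means a new object type stays inaccessible until an administrator grants a scope for it.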

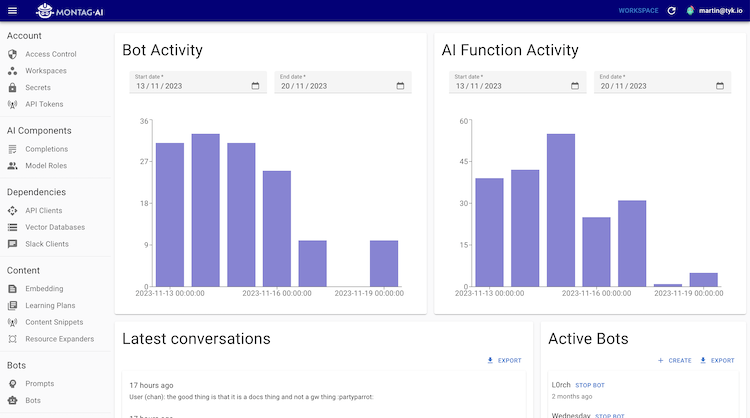

Montag has full analytics support, and records all inbound and outbound data in its database with full chat logs. This ensures you have full visibility of what is being used, when, and by whom.

Deploy AI within your organisation securely, and enable your teams to innovate quickly and safely using strong governance and a service-based approach. Leverage Montag's AI Developer Portal to make AI accessible to your teams without compromising on security.

Quickly and easily deploy smart APIs enriched with the power of AI models and your internal documentation and knowledge bases. Integrate with API platforms like Tyk to productise and develop AI-powered API products.

Build AI-powered applications quickly using Montag's SDK and REST API without vendor lock-in and quirky API differences. Make LLM-backend swapping seamless and ensure you can easily use the best product for the job.

Montag.AI is provided as a self-managed application, and may be available as a SaaS offering in the future.

Montag requires minimal resources and will run on any standard Linux-enabled cloud VM that can run Docker. Montag uses Postgres as its preferred database, but can be deployed with SQLite for non-production uses.

No, Montag.AI enables access to open-source LLMs by integrating with the OogBooga REST API. Ooga is a powerful LLM runner that is available on most popular GPU-cloud vendors.